Running Elasticsearch (ES), Kibana, and Cerebro in Docker Containers

1. Required environment and configuration file

Environment: Docker

Configuration file:

    version: '2.2'
    services:
      cerebro:
        image: lmenezes/cerebro:0.8.3
        container_name: cerebro
        ports:
          - "9000:9000"
        command:
          - -Dhosts.0.host=http://elasticsearch:9200
        networks:
          - es7net
      kibana:
        image: docker.elastic.co/kibana/kibana:7.1.0
        container_name: kibana7
        environment:
          - I18N_LOCALE=zh-CN
          - XPACK_GRAPH_ENABLED=true
          - TIMELION_ENABLED=true
          - XPACK_MONITORING_COLLECTION_ENABLED="true"
        ports:
          - "5601:5601"
        networks:
          - es7net
      elasticsearch:
        image: docker.elastic.co/elasticsearch/elasticsearch:7.1.0
        container_name: es7_01
        environment:
          - cluster.name=xiaoxiao
          - node.name=es7_01
          - bootstrap.memory_lock=true
          - "ES_JAVA_OPTS=-Xms512m -Xmx512m"
          - discovery.seed_hosts=es7_01,es7_02
          - cluster.initial_master_nodes=es7_01,es7_02
        ulimits:
          memlock:
            soft: -1
            hard: -1
        volumes:
          - es7data1:/usr/share/elasticsearch/data
        ports:
          - 9200:9200
        networks:
          - es7net
      elasticsearch2:
        image: docker.elastic.co/elasticsearch/elasticsearch:7.1.0
        container_name: es7_02
        environment:
          - cluster.name=xiaoxiao
          - node.name=es7_02
          - bootstrap.memory_lock=true
          - "ES_JAVA_OPTS=-Xms512m -Xmx512m"
          - discovery.seed_hosts=es7_01,es7_02
          - cluster.initial_master_nodes=es7_01,es7_02
        ulimits:
          memlock:
            soft: -1
            hard: -1
        volumes:
          - es7data2:/usr/share/elasticsearch/data
        networks:
          - es7net

    volumes:
      es7data1:
        driver: local
      es7data2:
        driver: local

    networks:
      es7net:
        driver: bridge

Startup commands

    docker-compose up       # start the services
    docker-compose down     # stop the containers
    docker-compose down -v  # stop the containers and also remove the data volumes

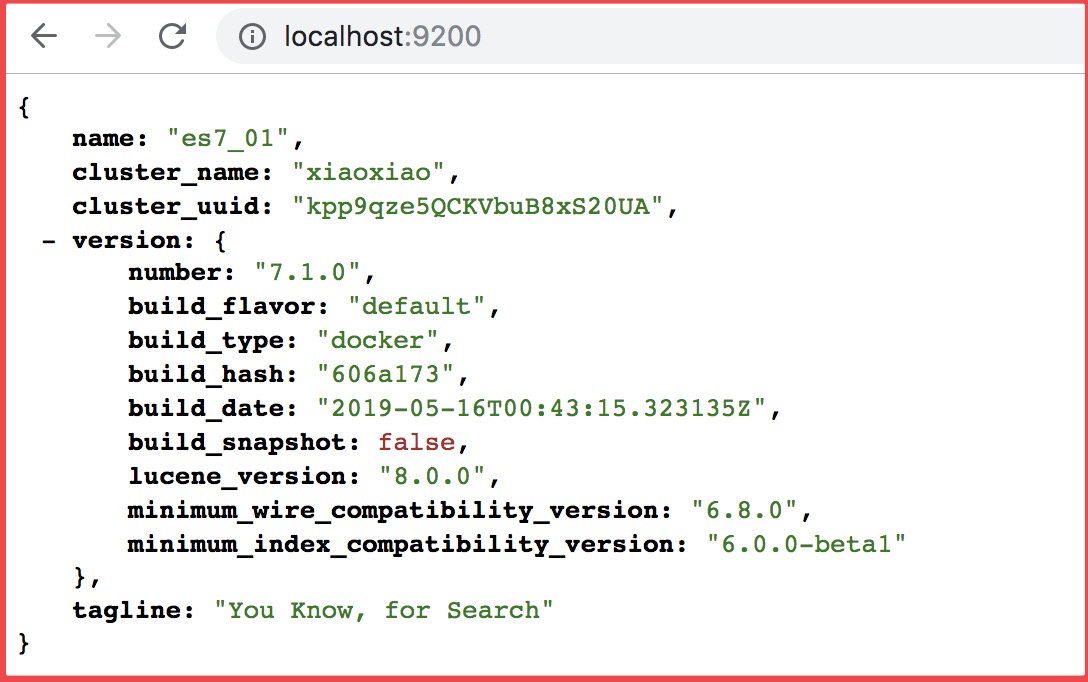

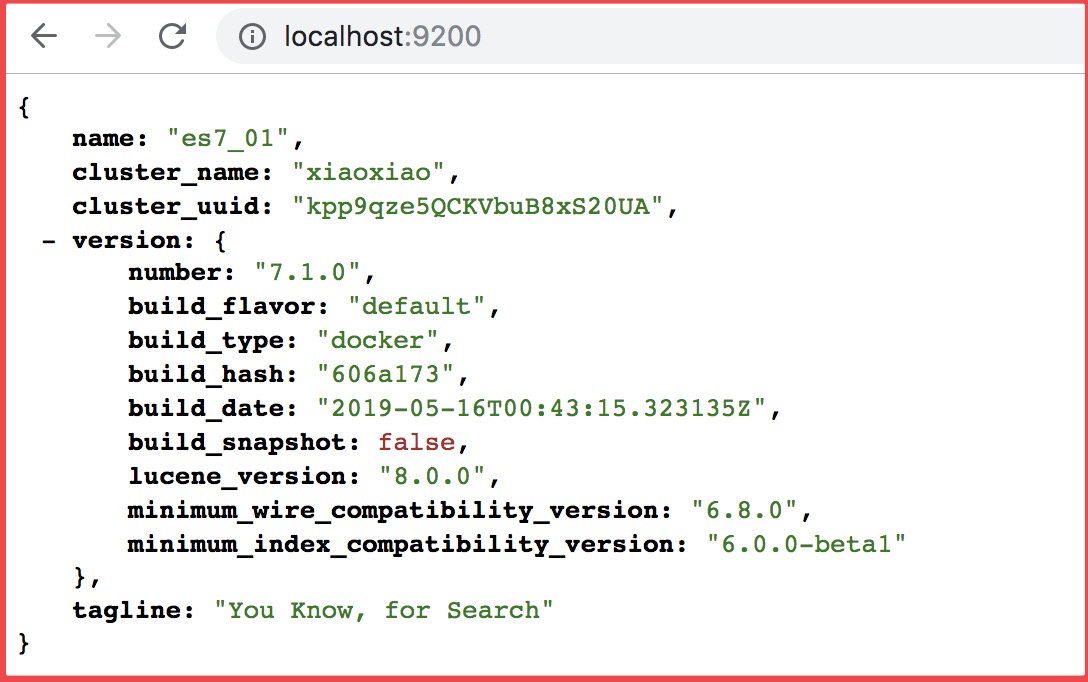

2. Starting the services

Check that everything started successfully.

Elasticsearch address

    localhost:9200  # the default ES port is 9200

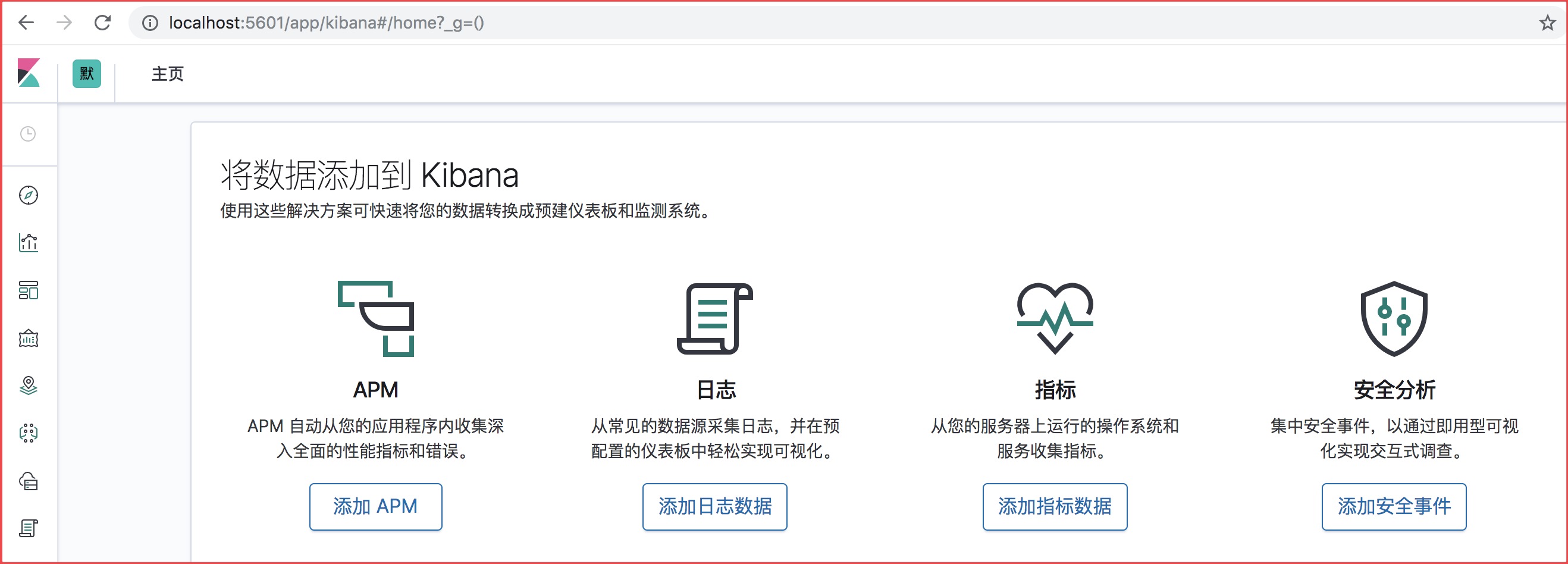

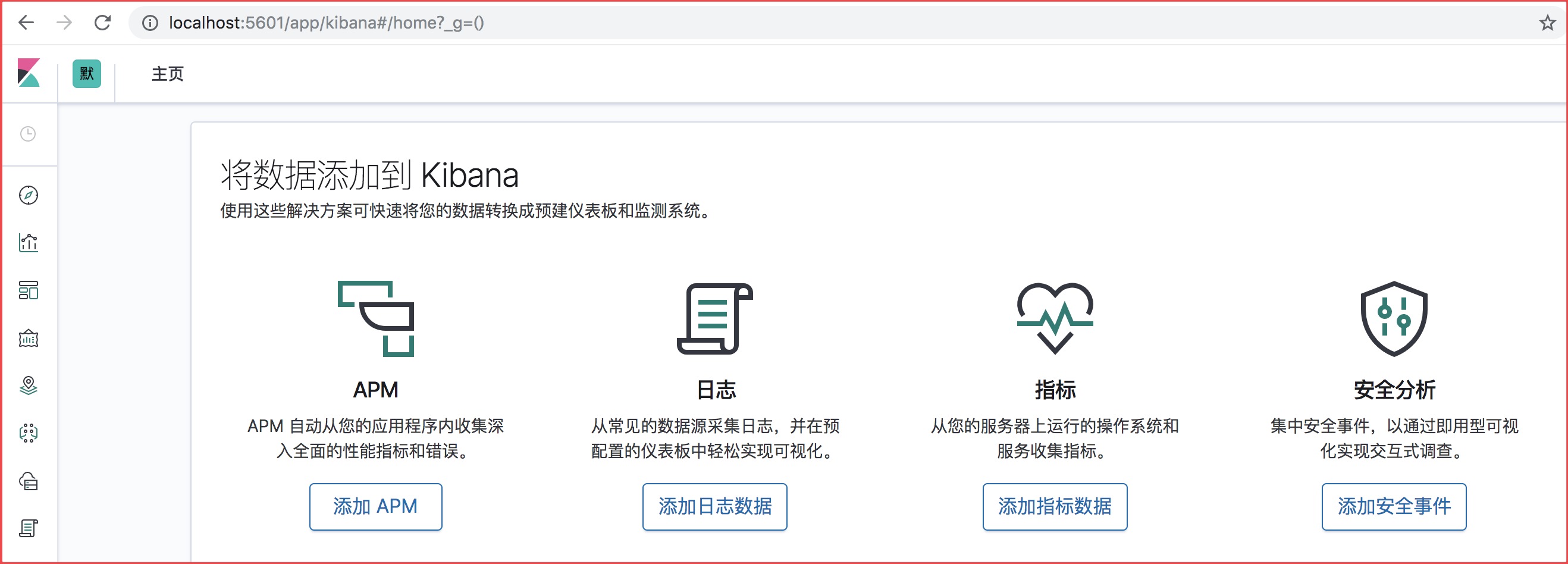

Kibana address

    localhost:5601  # the default Kibana port is 5601

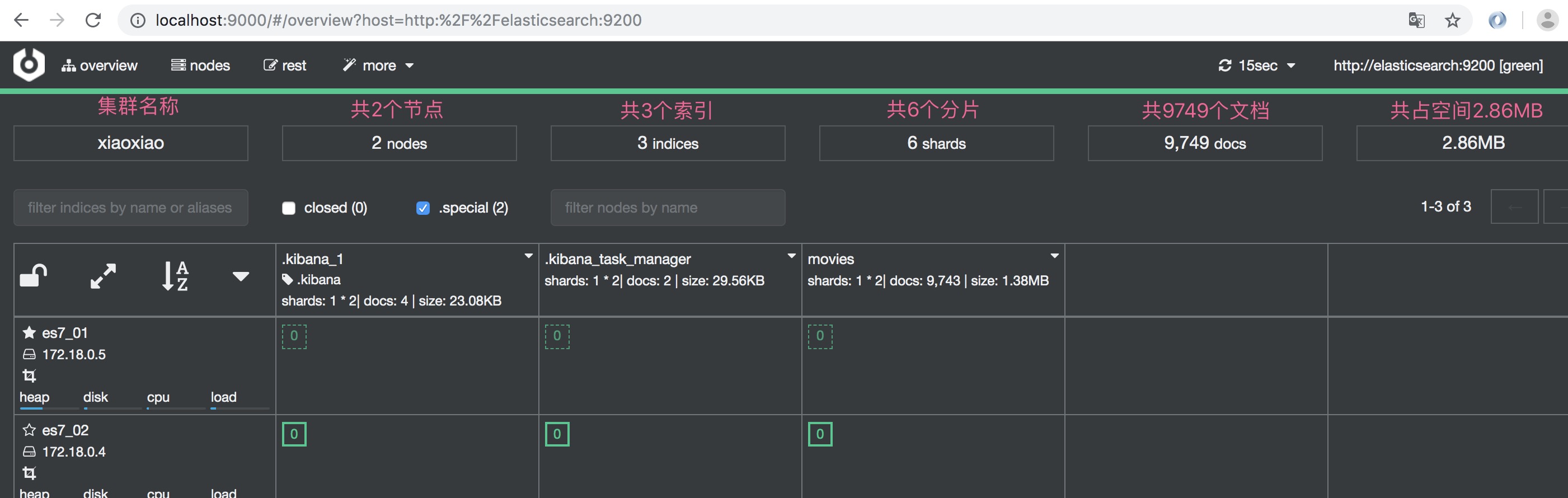

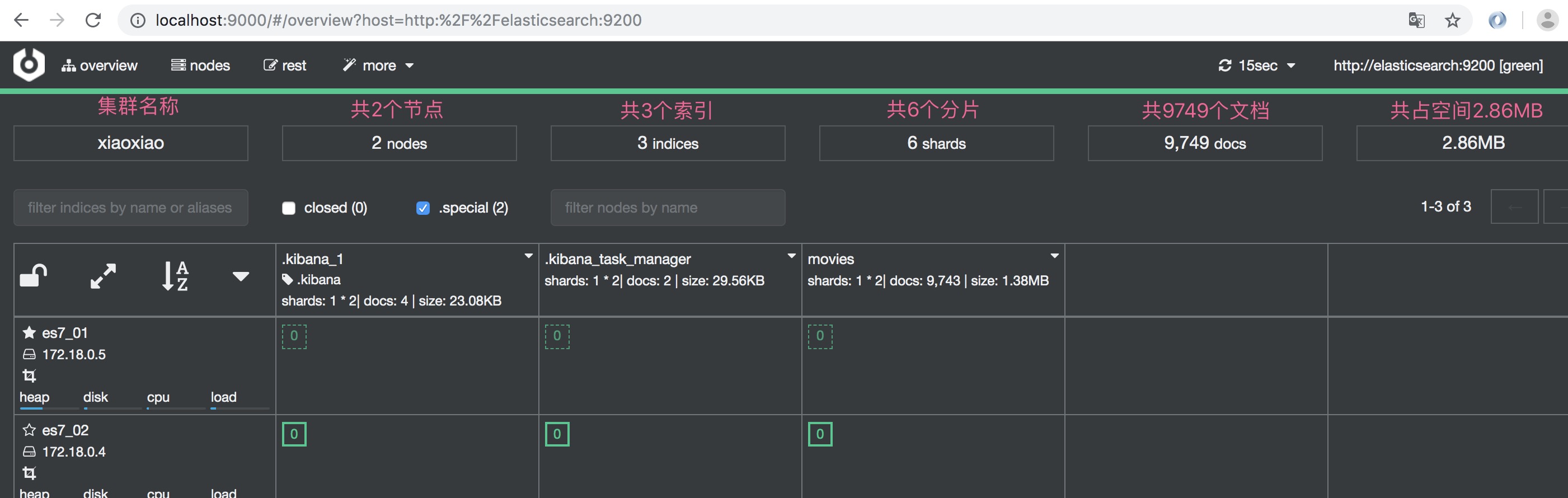

Cerebro address

    localhost:9000  # the default Cerebro port is 9000
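Opening three browser tabs works, but the same check can be scripted. The following is a minimal sketch, assuming the three services are published on localhost with the ports listed above; the names `SERVICES` and `is_reachable` are made up for this illustration and are not part of any of the tools.

```python
import http.client
import urllib.error
import urllib.request

# Addresses taken from the port mappings described in this article.
SERVICES = {
    "Elasticsearch": "http://localhost:9200",
    "Kibana": "http://localhost:5601",
    "Cerebro": "http://localhost:9000",
}


def is_reachable(url: str, timeout: float = 3.0) -> bool:
    """Return True if the URL answers with a successful HTTP status."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return 200 <= response.status < 300
    except (urllib.error.URLError, http.client.HTTPException, OSError):
        # Connection refused, timeout, HTTP error status, or a malformed reply.
        return False


if __name__ == "__main__":
    for name, url in SERVICES.items():
        state = "up" if is_reachable(url) else "not reachable"
        print(f"{name:<14} {url:<24} {state}")
```

Kibana can take noticeably longer to come up than Elasticsearch, so a "not reachable" result right after `docker-compose up` may only mean that the service is still starting.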

3. Installing Logstash and importing data into ES

Note: the Logstash and Kibana versions you download must match the version of your Elasticsearch.
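To find out which version to download, you can read the version the running node reports at its root URL (localhost:9200), which includes a `version.number` field. The sketch below only parses such a response and compares version strings; the helper names and the trimmed sample response are hypothetical and written by hand for illustration.

```python
import json


def elasticsearch_version(root_response: str) -> str:
    """Extract version.number from the JSON served at the ES root URL."""
    return json.loads(root_response)["version"]["number"]


def versions_match(es_version: str, other_version: str) -> bool:
    """True when both version strings are identical, e.g. '7.1.0'."""
    return es_version.strip() == other_version.strip()


# Trimmed, hand-written sample of a root response (illustrative only).
sample = '{"name": "es7_01", "cluster_name": "xiaoxiao", "version": {"number": "7.1.0"}}'

es_version = elasticsearch_version(sample)
print(es_version, versions_match(es_version, "7.1.0"))
```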

3.1 Configuring movices.yml

    # input defines where the data is read from; here it is the file movies.csv in the data folder
    input {
      file {
        path => "/Users/xub/opt/logstash-7.1.0/data/movies.csv"
        start_position => "beginning"
        sincedb_path => "/dev/null"
      }
    }

    filter {
      csv {
        separator => ","
        columns => ["id","content","genre"]
      }
      mutate {
        split => { "genre" => "|" }
        remove_field => ["path", "host","@timestamp","message"]
      }
      mutate {
        split => ["content", "("]
        add_field => { "title" => "%{[content][0]}"}
        add_field => { "year" => "%{[content][1]}"}
      }
      mutate {
        convert => {
          "year" => "integer"
        }
        strip => ["title"]
        remove_field => ["path", "host","@timestamp","message","content"]
      }
    }

    # output defines where the data is written; here it goes to the local ES, into an index named movies
    output {
      elasticsearch {
        hosts => "http://localhost:9200"
        index => "movies"
        document_id => "%{id}"
      }
      stdout {}
    }
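To see what the filter section does to a single row, here is a rough Python approximation of the csv and mutate steps. It is a sketch for understanding, not how Logstash is implemented: the function name `transform` and the sample row are assumptions, and keeping only the leading digits of the year is my approximation of the integer conversion.

```python
import csv
import io
import re


def transform(line: str) -> dict:
    """Approximate the csv + mutate filters for one line of movies.csv."""
    # csv filter: separator "," and columns id, content, genre
    movie_id, content, genre = next(csv.reader(io.StringIO(line)))
    # mutate split on "|": "Adventure|Comedy" -> ["Adventure", "Comedy"]
    genres = genre.split("|")
    # mutate split on "(": "Toy Story (1995)" -> ["Toy Story ", "1995)"]
    parts = content.split("(")
    # add_field title, then strip the surrounding whitespace
    title = parts[0].strip()
    # add_field year, then convert to integer (leading digits only: an assumption)
    match = re.match(r"\d+", parts[1]) if len(parts) > 1 else None
    year = int(match.group()) if match else 0
    # content is dropped, mirroring remove_field
    return {"id": movie_id, "title": title, "year": year, "genre": genres}


# Illustrative sample row in the id,content,genre layout the config expects.
print(transform("1,Toy Story (1995),Adventure|Animation|Children|Comedy|Fantasy"))
```

Because the split uses the first "(", a title that itself contains parentheses would not yield a usable year with this approach.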

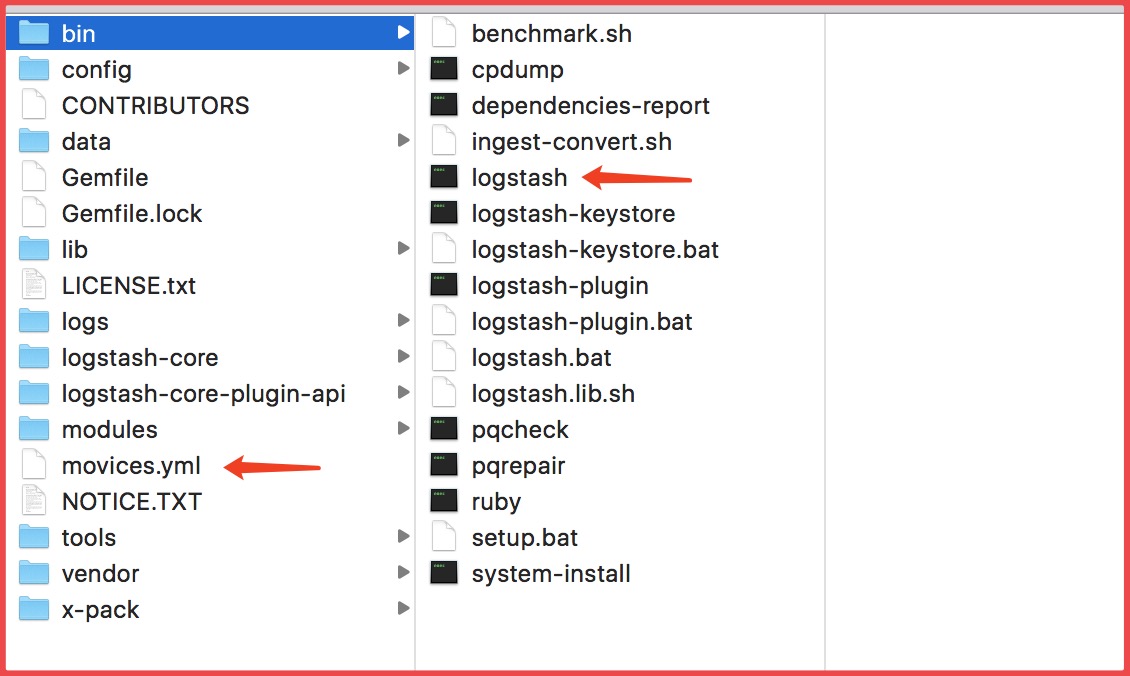

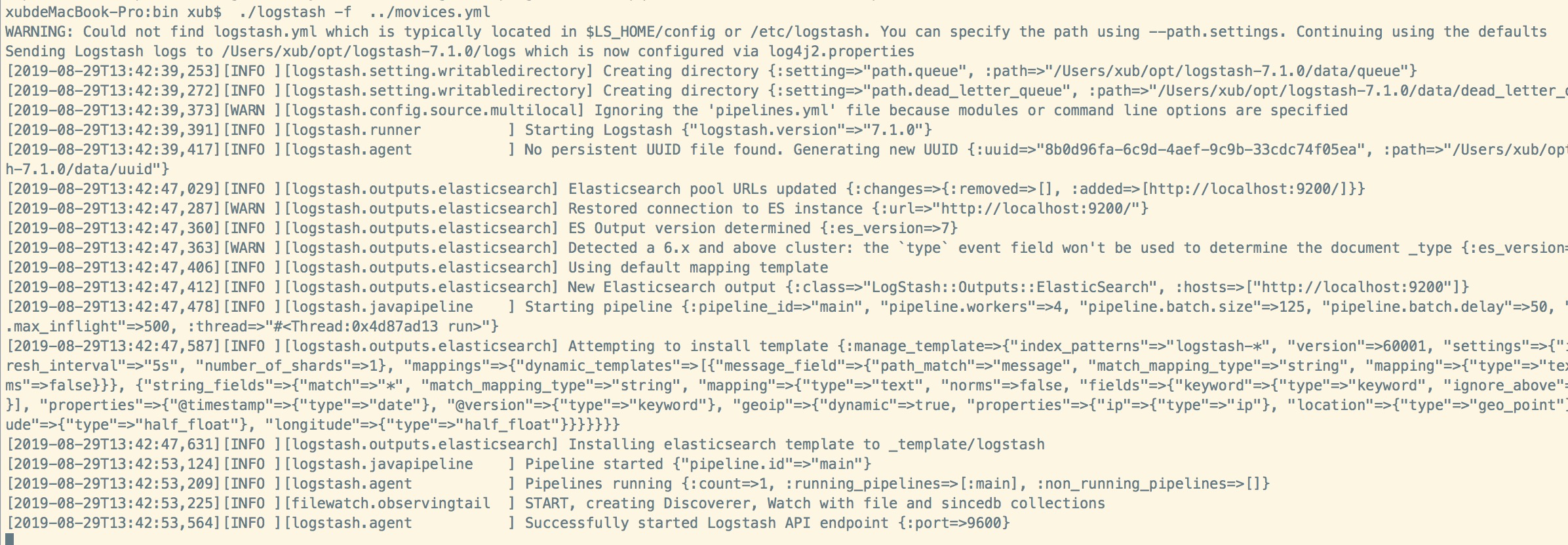

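Startup command: the exact command depends on where movices.yml is located. From the Logstash bin directory, pass the config file with the -f option:

    ./logstash -f ../movices.yml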

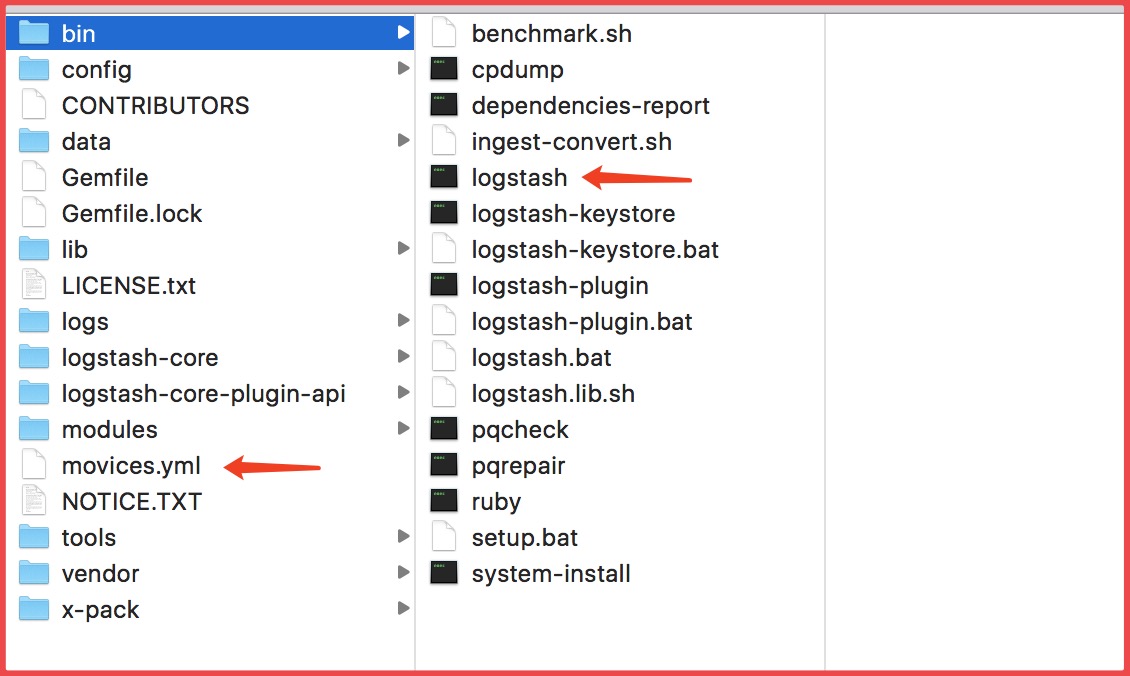

Location of movices.yml

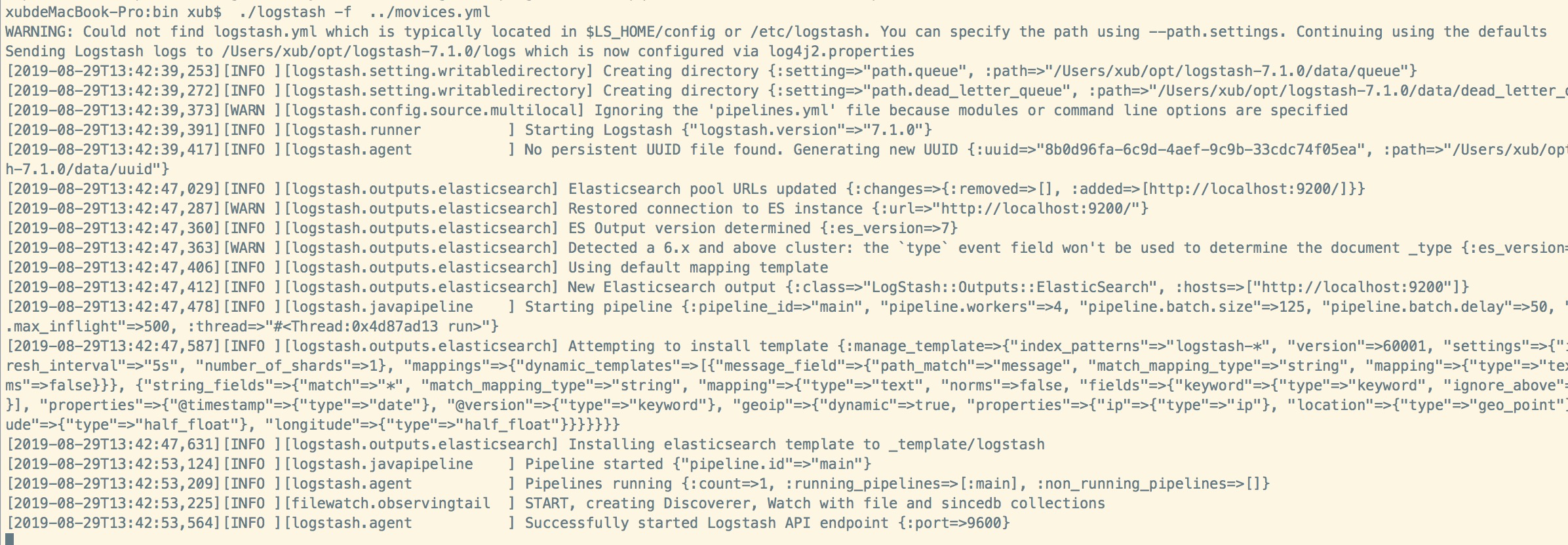

Logstash started successfully.
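Once Logstash has finished reading the file, one way to confirm the import is to ask Elasticsearch how many documents the movies index holds through the `_count` endpoint. A minimal sketch, assuming ES is reachable at http://localhost:9200; the helper names are hypothetical.

```python
import json
import urllib.request


def count_url(host: str, index: str) -> str:
    """Build the _count URL for an index."""
    return f"{host.rstrip('/')}/{index}/_count"


def parse_count(body: str) -> int:
    """Read the "count" field from a _count response body."""
    return int(json.loads(body)["count"])


def document_count(host: str = "http://localhost:9200", index: str = "movies") -> int:
    """Ask Elasticsearch how many documents the index holds."""
    with urllib.request.urlopen(count_url(host, index), timeout=5) as response:
        return parse_count(response.read().decode("utf-8"))
```

Calling `document_count()` while the cluster is running returns the number of documents in movies. Because the config sets `document_id` to the CSV id, re-running the import overwrites existing documents instead of adding duplicates.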
